Take Your Conversational AI Offline: A Step-by-Step Guide to Installing Ollama and a GUI

Conversational AI has changed the way we interact with technology, letting us hold natural, human-like conversations with software. However, popular assistants such as ChatGPT run on remote servers, so they only work with an internet connection. That is a real drawback if you want to use them offline or in places with limited connectivity.

Fortunately, there's a solution: Ollama, an open-source tool for downloading and running large language models directly on your own machine. Paired with a browser-based GUI, it gives you a ChatGPT-like experience that keeps working without an internet connection once the software and a model have been downloaded. In this guide, we'll walk you through setting up Ollama and a GUI on your device, so you can start chatting with an offline AI model today.

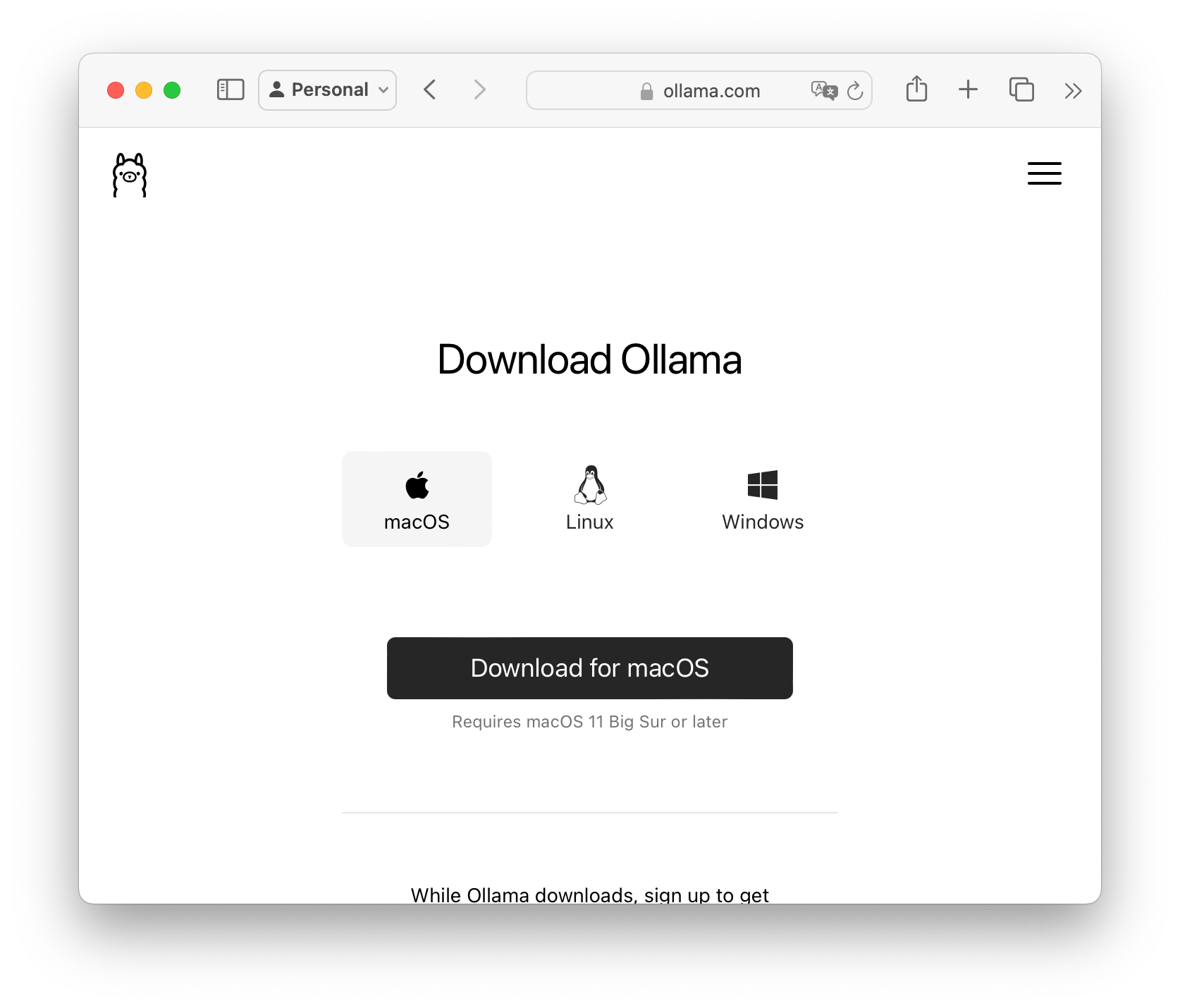

Step 1: Downloading and Installing Ollama

First, download the Ollama installer for your operating system from the official website: https://www.ollama.com/download, then run it. Once installed, Ollama runs in the background as a local service, and the GUI set up in the following steps connects to it.
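A quick way to confirm the installation worked is to check that the `ollama` command is available in a terminal. The sketch below is a POSIX shell check (on Windows, simply run `ollama --version` in PowerShell); the `OLLAMA_FOUND` variable is an illustrative name, not something Ollama itself uses.

```shell
# Confirm the Ollama CLI was installed and is on the PATH.
# OLLAMA_FOUND is an illustrative variable name, not part of Ollama.
if command -v ollama >/dev/null 2>&1; then
  ollama --version   # prints the installed Ollama version
  OLLAMA_FOUND=yes
else
  echo "ollama not found on PATH; re-run the installer or open a new terminal" >&2
  OLLAMA_FOUND=no
fi
```

If the command isn't found right after installing, opening a fresh terminal window usually picks up the updated PATH.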

Step 2: Installing Docker

Ollama itself does not need Docker, but the GUI used in this guide, Open WebUI, is distributed as a Docker container image, so you'll need Docker installed to run it. You can download Docker Desktop from the official website: https://www.docker.com/. The installation process is straightforward and usually takes only a few minutes. Make sure Docker is running before you continue.
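Before moving on, it can help to confirm that Docker is installed and that its daemon is actually running, since the next step fails otherwise. A minimal sketch for a POSIX shell; `docker info` only succeeds when it can reach the Docker daemon, and `DOCKER_OK` is an illustrative variable name.

```shell
# Check that the docker CLI exists and that the Docker daemon is reachable.
# DOCKER_OK is an illustrative variable name.
if command -v docker >/dev/null 2>&1 && docker info >/dev/null 2>&1; then
  docker --version   # prints the Docker client version
  DOCKER_OK=yes
else
  echo "Docker is not installed, or Docker Desktop / the Docker daemon is not running" >&2
  DOCKER_OK=no
fi
```

Once this passes, `docker run hello-world` is another common smoke test that pulls and runs a tiny test image.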

Step 3: Running Open WebUI

With Docker installed and running, it's time to start Open WebUI, a browser-based GUI for chatting with the models that Ollama serves. Open a terminal and run the following command:

```shell
docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always -e WEBUI_AUTH=False ghcr.io/open-webui/open-webui:main
```

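This command downloads the Open WebUI image (the first run needs an internet connection) and starts it in a new Docker container named `open-webui`. The flags do the following: `-d` runs the container in the background; `-p 3000:8080` makes the web interface available on port 3000 of your machine; `--add-host=host.docker.internal:host-gateway` lets the container reach the Ollama service running on your host; `-v open-webui:/app/backend/data` stores chats and settings in a named volume so they survive container restarts; `--restart always` restarts the container automatically, for example after a reboot; and `-e WEBUI_AUTH=False` turns off the login screen, which suits a single-user setup on your own machine.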
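To check that the container actually came up, you can list it by name. A minimal sketch, assuming the `--name open-webui` used above; `docker ps` shows only running containers, so use `docker ps -a` to see one that has stopped. `WEBUI_UP` is an illustrative variable name.

```shell
# Show the open-webui container if it is running (matches the --name used above).
# WEBUI_UP is an illustrative variable name.
if command -v docker >/dev/null 2>&1 &&
   docker ps --filter "name=open-webui" --format '{{.Names}}  {{.Status}}' | grep open-webui; then
  WEBUI_UP=yes
else
  echo "open-webui is not running; inspect it with: docker logs open-webui" >&2
  WEBUI_UP=no
fi
```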

Step 4: Accessing the GUI

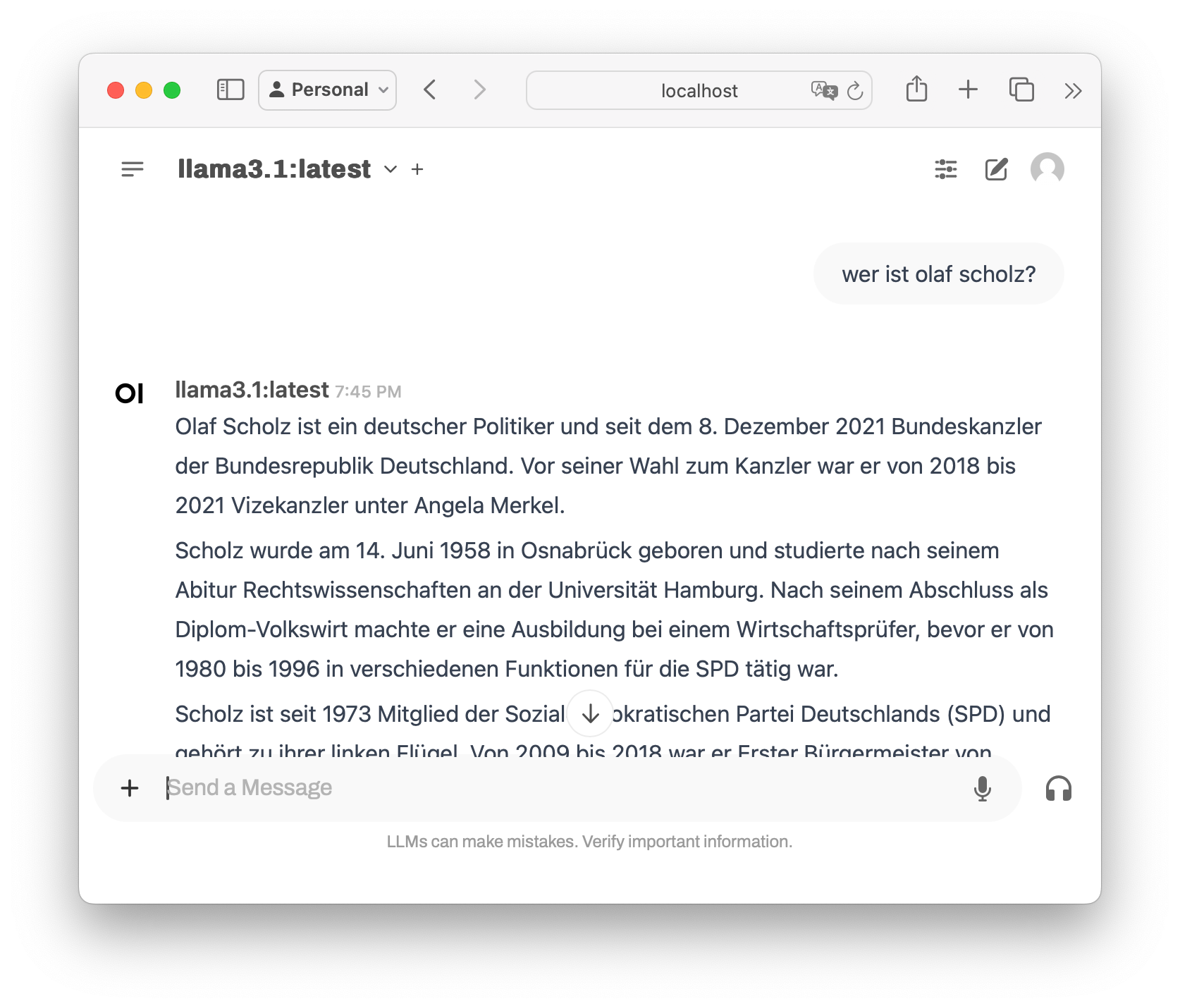

With Open WebUI running, you can now access the GUI by visiting http://localhost:3000 in your web browser. If the page doesn't load right away, give the container a moment to finish starting. You'll then see a chat interface where you can search for, download, and chat with AI models served by Ollama.
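Open WebUI gets its list of models from Ollama, so if the interface loads but no models or connection errors appear, it's worth checking whether Ollama's own API is answering. A minimal sketch, assuming Ollama's default port 11434. The `/api/tags` endpoint returns the locally installed models as JSON. `OLLAMA_API` is an illustrative variable name.

```shell
# Ollama's API listens on localhost:11434 by default; /api/tags lists the installed models.
# OLLAMA_API is an illustrative variable name.
if command -v curl >/dev/null 2>&1 && curl -fsS --max-time 5 http://localhost:11434/api/tags; then
  echo   # move to a new line after the JSON reply
  OLLAMA_API=up
else
  echo "Ollama is not answering on localhost:11434; start the Ollama app or run 'ollama serve'" >&2
  OLLAMA_API=down
fi
```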

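Step 5: Searching for Llama 3.1

To start chatting with an offline AI model, open the model selector at the top of the chat window and search for "llama3.1:latest". If the model isn't installed yet, choose the option to pull it from Ollama.com. This gives you the 8B version of Meta's Llama 3.1, a capable general-purpose chat model. The one-time download is several gigabytes and needs an internet connection. After that, the model runs entirely on your machine.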
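If you prefer the terminal, the same model can be downloaded with the Ollama CLI. Because Open WebUI reads its model list from Ollama, the model then shows up in the GUI as well. A minimal sketch, assuming Ollama is installed and running; `MODEL_PULLED` is an illustrative variable name.

```shell
# Download the same model from the command line instead of the GUI.
# MODEL_PULLED is an illustrative variable name.
MODEL="llama3.1:latest"
if command -v ollama >/dev/null 2>&1 && ollama pull "$MODEL"; then
  ollama list   # the model should now appear in this list
  MODEL_PULLED=yes
else
  echo "Could not pull $MODEL; check that Ollama is installed and running" >&2
  MODEL_PULLED=no
fi
```

After the pull, `ollama run llama3.1` opens an interactive chat directly in the terminal, without the GUI.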

And that's it! You now have Ollama and a GUI running on your machine. Once the model has been downloaded, you can chat with it at your convenience, even with no internet connection.
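To confirm that the setup really works offline, you can disconnect from the network and send a prompt straight to Ollama's local REST API, the same service Open WebUI talks to behind the scenes. A minimal sketch, assuming the default port 11434 and that `llama3.1:latest` has already been pulled. With `"stream": false`, the generated text arrives in the `response` field of a single JSON object. `LOCAL_REPLY` is an illustrative variable name.

```shell
# Ask the local model a question through Ollama's REST API (no internet needed).
# "stream": false returns one JSON object instead of a stream of chunks.
# LOCAL_REPLY is an illustrative variable name.
if command -v curl >/dev/null 2>&1 &&
   curl -fsS --max-time 120 http://localhost:11434/api/generate \
     -d '{"model": "llama3.1:latest", "prompt": "Say hello in one sentence.", "stream": false}'; then
  echo
  LOCAL_REPLY=ok
else
  echo "No reply from the local model; check that Ollama is running and the model was pulled" >&2
  LOCAL_REPLY=failed
fi
```

The generous `--max-time` allows for the first request, which can be slow while the model is loaded into memory.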